Audio for Communication and Environment Perception (CoTeSys Project #428)

Project Overview

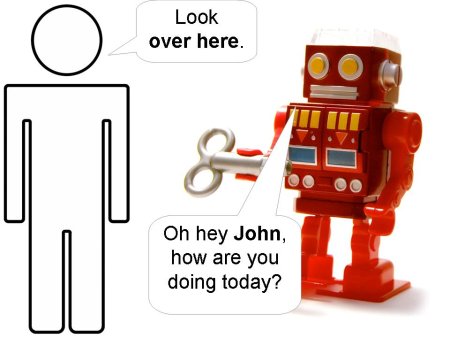

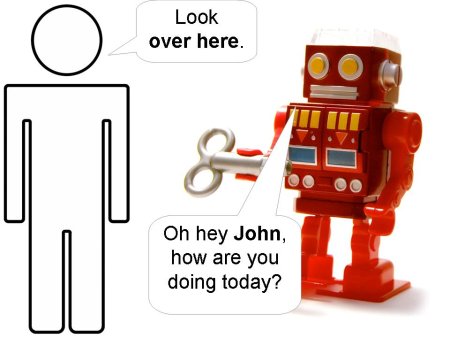

AudiComm focuses on high- and low-level sound and speech processing. The main goals of the project are to implement methods that determine who is speaking, where the speaker is located, and what the speaker is saying. Our approaches to sound localization, speaker identification, and speech processing will enable robots to communicate with humans in more natural ways.

Currently, the project has implemented a first software system that integrates sound localization using generalized cross-correlation, speaker identification based on Gaussian mixture models, and a speech processing and language grounding component based on combinatory categorial grammar.
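To illustrate the idea behind sound localization with generalized cross-correlation, the following is a minimal sketch of delay estimation between two microphone signals using the PHAT weighting. It is not the AudiComm implementation: the function names `dft` and `gcc_phat_delay`, the choice of the PHAT weighting, and the synthetic test signal are assumptions made for this example, and a naive transform is used only to keep the code free of dependencies.

```python
import cmath
import random


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (illustrative, dependency-free)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def gcc_phat_delay(sig, ref, max_lag):
    """Return the lag (in samples) by which `sig` trails `ref`.

    A negative result means that `sig` leads `ref`.
    """
    # Zero-pad so that the circular correlation behaves like a linear one.
    n = len(sig) + len(ref)
    S = dft(list(sig) + [0.0] * (n - len(sig)))
    R = dft(list(ref) + [0.0] * (n - len(ref)))

    # Cross-power spectrum with PHAT weighting: keep only the phase.
    cross = []
    for s, r in zip(S, R):
        c = s * r.conjugate()
        mag = abs(c)
        cross.append(c / mag if mag > 1e-12 else 0j)

    # Back to the lag domain; the peak marks the most likely delay.
    cc = [v.real for v in dft(cross, inverse=True)]
    return max(range(-max_lag, max_lag + 1), key=lambda lag: cc[lag % n])


# Synthetic example (assumed data): the same noise burst reaches the
# second microphone 5 samples later than the first.
random.seed(0)
burst = [random.gauss(0.0, 1.0) for _ in range(50)]
mic_a = burst + [0.0] * 14
mic_b = [0.0] * 5 + burst + [0.0] * 9

delay = gcc_phat_delay(mic_b, mic_a, max_lag=10)
print("estimated delay in samples:", delay)
```

Given the sampling rate and the microphone spacing, such a delay can be converted into a direction of arrival; with more than two microphones, pairwise delays can be combined into a position estimate.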

In the future, AudiComm will develop an acoustic environment map that represents audible events and can be used for human-robot interaction, for example for symbol grounding or for combining classification results from visual sensors with AudiComm results. The project plans to integrate into the acoustic environment map not only information from sound localization and speaker identification, but also results from other sound classifiers, including verbal/nonverbal classification.

News

AudiComm participated in the CoTeSys workshop, which took place on October 29-30, 2009. You can download the AudiComm poster presentation here:

AudiComm CoTeSys poster

People and Partners

Acknowledgement

This ongoing work is supported by the DFG excellence initiative research cluster Cognition for Technical Systems (CoTeSys); see www.cotesys.org for further details.

Publications

[1] Manuel Giuliani, Claus Lenz, Thomas Müller, Markus Rickert, and Alois Knoll. Design principles for safety in human-robot interaction. International Journal of Social Robotics, 2(3):253-274, 2010.
