Joint-Action for Humans and Industrial Robots (CoTeSys Project #328)

- Project Overview
- Public Demonstrations
- Project Progress
- Videos
  - The Cognitive Factory Scenario - An Overview Video
  - Task-based robot controller
  - Internal 3D representation
  - Learning a new building plan and Execution of previously learned building plans
  - Kinect enabled robot workspace surveillance
  - Interactive human-robot collaboration with Microsoft Kinect
  - Fusing multiple Kinects to survey shared Human-Robot-Workspaces
- People and Partners
- Acknowledgement
- Publications

Project Overview

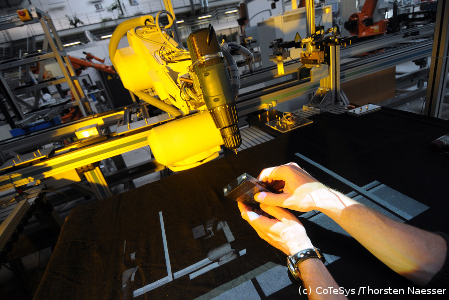

JAHIR aims to integrate industrial robots into human-dominated working areas in industrial settings, so that humans and robots can cooperate naturally as true peers. To enable cooperation at this level, the project focuses on observing and understanding the human partner's non-verbal communication channels, using advanced computer vision algorithms as well as other measurable cues. Unlike typical fixed set-ups, production areas have heavily unconstrained environmental conditions, including crossing workers, moving machines, and variable lighting. Therefore, robust preprocessing of the visual input signals is required to select only those objects or image regions that are relevant to joint action.

JAHIR aims to integrate industrial robots in a human-dominated working area in industrial settings, so that humans and robots can naturally cooperate on a true peer-to-peer level. To enable a cooperation on this level, the project focuses on the observation and understanding of non-verbal communication channels from the human partner based on advanced computer vision algorithms as well as other measurable cues. Unlike in usual fixed set-ups, the environmental conditions in production areas are heavily unconstrained, including crossing workers, moving machines and variable lighting conditions. Therefore, a stable preprocessing of the visual input signals is required to select only objects or image apertures, which are relevant and reasonable for joint action.

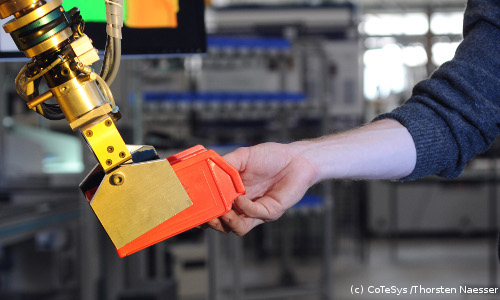

The integration of various sensors, e.g. cameras and force/torque sensors, is needed for interaction scenarios such as handing over parts during assembly. Comprehensive task knowledge and a general representation of it are required to make the robot an equal partner in the assembly process, based on sophisticated learning and action planning strategies. To avoid collisions, the robot must react to sensor input in real time, which requires advanced on-line motion planning. Additionally, an intelligent safety system is necessary to ensure the physical safety of the human worker, going beyond state-of-the-art systems that merely slow down or stop the robot when the worker comes close to it.

The JAHIR demonstration platform is integrated into the Cognitive Factory, one of the main demonstration set-ups of CoTeSys (Cognition for Technical Systems), which sits between fully automated and fully manual production processes.

The many challenging scientific aspects of JAHIR can only be investigated through a rich set of interdisciplinary research activities. Therefore, electrical engineers, mechanical engineers, and computer scientists work together in JAHIR, supported by psychologists who have used, and continue to use, JAHIR as an experimental stage.

The integration of various sensors, e.g. cameras and force/torque sensors, is needed for interaction scenarios such as the handing over of various parts during the assembly. A comprehensive task knowledge and its general representation is required to make the robot an equal partner in the assembly process based on sophisticated learning and action planing strategies. The robot needs to react to sensor input in real time to avoid collisions, which requires advanced on-line motion and movement planning. Additionally, an intelligent safety system is necessary to ensure the physical security of the human worker, going beyond state-of-the-art systems that slows down the robot or stops it if the human worker comes close to it.

The demonstration platform of JAHIR is integrated in the Cognitive Factory - one of the main demontration set-ups of CoTeSys (Cognition for Technical Systems) between fully automated and fully manual production processes.

The multiple and challenging scientific aspects of JAHIR can only be investigated with a rich set of interdisciplinary research activities. Therefore, electrical and mechanical engineers and computer scientists work together in JAHIR supported by psychologists that have used and use JAHIR as experimental stage.

Public Demonstrations

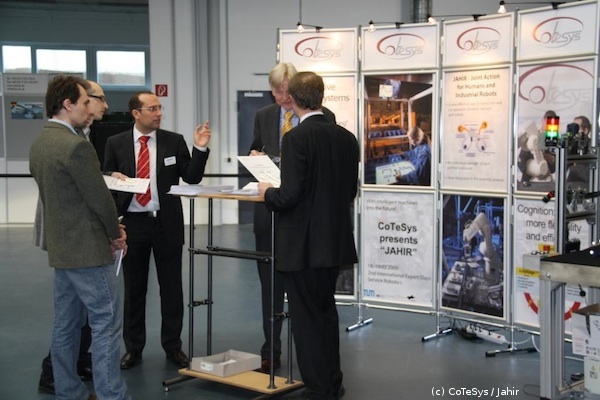

The members of JAHIR successfully presented their demonstration platform and research results at several major public events, including AUTOMATICA 2008, Münchener Kolloquium 2008, the 1st CoTeSys Workshop for Industry 2008, Münchener Kolloquium 2009, the Schunk Expert Days 2009, and CARV 2009. The JAHIR demonstrator has also been shown at several TUM events and to external guests from other research institutes and industry, e.g. Festo, BMW, Schunk, Reis Robotics, and KUKA. JAHIR sparked the interest of several industrial partners and paved the way for future cooperation with CoTeSys.

Project Progress

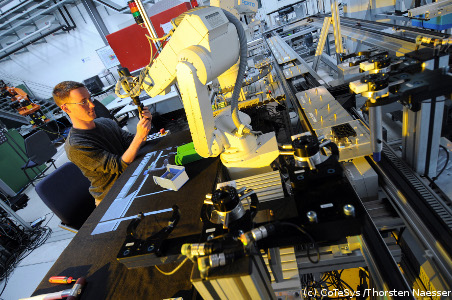

Over the course of the JAHIR project, the research platform has established itself as an ideal testbed for several scientific approaches as well as a stable showcase system that has sparked industrial interest.

The functional goal of JAHIR is to bring human workers and industrial robots together so that they can safely share the same physical workspace. By bridging the gap between automated and manual production, a novel hybrid assembly situation arises that combines highly adaptable manufacturing skills with high precision.

To realize a system controller that enables safe human-robot interaction, several reliable, real-time-capable input and output modules were integrated first. In close collaboration between JAHIR team members and partners from the projects ACIPE, ItrackU, and MuDiS, several tools were implemented for worker and desktop surveillance, covering computer-vision-, depth-map-, and laser-scanner-based perception approaches.

Throughout the runtime of the JAHIR project, the developed research platform has established itself as an ideal testbed for several scientific approaches as well as a stable showcasing system sparking industrial interest.

The functional goal of JAHIR is to bring human workers and industrial robots together so that they can safely share the same physical workspace. By bridging the gap between automated and manual production a novel hybrid assembly situation arises that allows highly adaptable manufacturing skills coupled with high precision.

In order to realize a system controller that enables a safe human-robot-interaction, several reliable real-time capable modules for input and output were integrated in the first steps. By a hand-in-hand collaboration of JAHIR team members with partners from the projects ACIPE, ItrackU and MuDiS several tools have been implemented for (human) worker and desktop surveillance, covering computer vision, depth-map, and laser scanner based perception approaches.

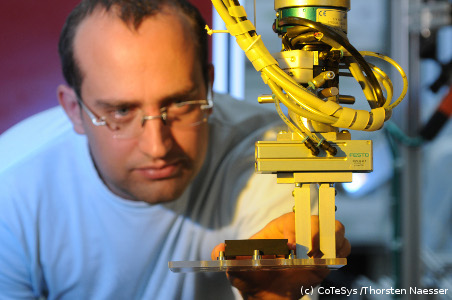

Furthermore, several non-standard output modules were mechanically installed on the hardware side and integrated on the software side, namely an articulated 6-DOF industrial robot, a gripper changing system, and the connected end effectors (e.g. a drilling machine, a pneumatic or electrical gripper, or a glue gun). Worker guidance is provided by a tabletop-mounted video projector that visualizes information for the worker. Mechanical actions can be measured by a force-torque sensor.

To close the desired Perception-Cognition-Action loop, a cognition-based system controller has been designed and implemented that interfaces with all perception and action components. The underlying data streaming is based on the Real-time Database for Cognitive Automobiles, extended with additional communication channels. JAHIR's current action control strategy follows a dialog-assisted assembly process. Building on a finite state machine implemented with the UniMod library, a plan defining all steps of the manufacturing process can either be programmed in advance as a JESS-based knowledge base or taught to the system at runtime.
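To make the teach-then-execute idea more concrete, here is a minimal sketch of a plan-driven state machine. It is not the JAHIR controller and uses neither UniMod nor JESS; the class names, the `Mode` states, and the `confirm_step_done` trigger (standing in for a perception event or a dialog confirmation from the worker) are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import List


class Mode(Enum):
    IDLE = auto()
    TEACHING = auto()
    EXECUTING = auto()
    DONE = auto()


@dataclass
class AssemblyStep:
    # Hypothetical step description: who performs it and what is done.
    actor: str   # "robot" or "human"
    action: str  # free-text description, e.g. "hand over part"


@dataclass
class PlanController:
    """Toy state machine that records a plan at runtime and replays it step by step."""
    mode: Mode = Mode.IDLE
    plan: List[AssemblyStep] = field(default_factory=list)
    index: int = 0

    def start_teaching(self) -> None:
        if self.mode is not Mode.IDLE:
            raise RuntimeError(f"cannot teach in mode {self.mode.name}")
        self.plan.clear()
        self.mode = Mode.TEACHING

    def teach(self, step: AssemblyStep) -> None:
        if self.mode is not Mode.TEACHING:
            raise RuntimeError("not in teaching mode")
        self.plan.append(step)

    def finish_teaching(self) -> None:
        if self.mode is not Mode.TEACHING:
            raise RuntimeError("not in teaching mode")
        self.mode = Mode.IDLE

    def start_execution(self) -> None:
        if self.mode is not Mode.IDLE or not self.plan:
            raise RuntimeError("need a taught plan and IDLE mode")
        self.index = 0
        self.mode = Mode.EXECUTING

    def current_step(self) -> AssemblyStep:
        if self.mode is not Mode.EXECUTING:
            raise RuntimeError("not executing")
        return self.plan[self.index]

    def confirm_step_done(self) -> None:
        """Advance when perception or the worker (via dialog) reports the step as finished."""
        if self.mode is not Mode.EXECUTING:
            raise RuntimeError("not executing")
        self.index += 1
        if self.index == len(self.plan):
            self.mode = Mode.DONE
```

A plan programmed in advance would simply populate `plan` directly instead of going through the `TEACHING` state.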

Furthermore, several appropriate non-standard output modules were mechanically installed on the hardware side as well as integrated to be interfaced from the software side, i.e. an articulated industrial 6-dof robot, a gripper changing system and the connected end effectors (e.g. drilling machine, pneumatic or electrical gripper, or glue gun). Worker guidance is integrated by visualizing information by a tabletop-mounted video projector. Actions on the mechanical side can be measured by a force-torque sensor.

To close the desired Perception-Cognition-Action loop, a cognition based system controller has been designed and implemented interfacing all perception and action components. The underlying data streaming technique is based on the Real-time Database for Cognitive Automobiles expanded by additional communication channels. The current action control strategy of JAHIR is referring to a dialog assisted assembly process. Starting from a finite state machine using the UniMod library, a plan defining all steps of the manufacturing process may either be programmed in advance in form of a JESS-oriented knowledge base or even taught to the system during runtime.

Another aspect was enhancing safety in the collaboration cell. Different safety sensors were installed in hardware and integrated in software. The shared workspace of the human and the robot can now be configured. The human worker is detected and localized via sensor mats or a PMD (Photonic Mixer Device) range camera. If the worker comes too close to the robot, the robot slows down.
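The distance-dependent slowdown can be sketched as a speed override factor. This is an illustration only, not the JAHIR safety system and not a certified safety function: the page does not give zone geometry or speeds, so the stop and slow-down distances below are hypothetical, and worker detections (from sensor mats or the range camera) are assumed to arrive as planar points.

```python
import math
from typing import Iterable, Tuple

Point2D = Tuple[float, float]


def closest_worker_distance(robot_xy: Point2D, detections: Iterable[Point2D]) -> float:
    """Minimum planar distance from the robot to any worker detection (inf if none)."""
    return min((math.dist(robot_xy, d) for d in detections), default=math.inf)


def speed_override(distance_m: float, stop_dist_m: float = 0.3, slow_dist_m: float = 1.0) -> float:
    """Velocity scaling factor in [0, 1] derived from the human-robot distance.

    Hypothetical thresholds: at or below stop_dist_m the robot halts, at or above
    slow_dist_m it runs at full speed, and in between the factor grows linearly.
    """
    if not 0.0 <= stop_dist_m < slow_dist_m:
        raise ValueError("require 0 <= stop_dist_m < slow_dist_m")
    if distance_m <= stop_dist_m:
        return 0.0
    if distance_m >= slow_dist_m:
        return 1.0
    return (distance_m - stop_dist_m) / (slow_dist_m - stop_dist_m)
```

A linear ramp is only one possible choice; stepped zones (for example "reduced speed" and "stop") would fit the same interface.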

Further scientific highlights of this project address efficient, human-adapted robot motion, safety requirements, and psychological studies on human factors in human-robot interaction. For more details on these aspects, please refer to the publications at the bottom of this page.

Another aspect was to enhance safety in the collaboration cell. Different safety sensors were installed in hardware and implemented in software. It is now possible to configure the shared workspace of the human and the robot. The human worker is detected and localized via sensor mats or PMD (Photonic Mixer Device as range map) camera. If the worker is too close to the robot, it will slow down.

Further scientific highlights arising from this project emphasized aspects like efficient, human-adapted robotic motion, safety requirements and psychological studies on human factors during a human-robot-interaction. For more details on these aspects please refer to the publications at the bottom of this page.

Videos

The Cognitive Factory Scenario - An Overview Video

The JAHIR project is embedded in the demonstration scenario "The Cognitive Factory". The video shows an overview of the scenario and all integrated projects.

Task-based robot controller
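As a rough illustration of the nullspace-projection idea behind the hierarchical controller described in the paragraph below, here is a classic two-level task-priority velocity resolution. It is a sketch under simplifying assumptions, not the authors' controller: each task is one-dimensional (a 1 x n Jacobian row), only two priority levels are shown, and the constrained least-squares part is omitted. Pure Python is used to keep it dependency-free.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def row_pinv(j: Vector, eps: float = 1e-12) -> Vector:
    """Moore-Penrose pseudoinverse of a 1 x n task Jacobian, returned as an n-vector."""
    sq_norm = dot(j, j)
    if sq_norm < eps:  # singular (or fully blocked) task: contribute nothing
        return [0.0] * len(j)
    return [x / sq_norm for x in j]


def nullspace_projector(j: Vector) -> Matrix:
    """N = I - j^+ j: joint velocities filtered by N do not affect task j."""
    jp = row_pinv(j)
    n = len(j)
    return [[(1.0 if r == c else 0.0) - jp[r] * j[c] for c in range(n)] for r in range(n)]


def prioritized_velocities(j1: Vector, xd1: float, j2: Vector, xd2: float) -> Vector:
    """Task 1 is met exactly (if feasible); task 2 is pursued only inside the nullspace of task 1."""
    qd1 = [xd1 * p for p in row_pinv(j1)]
    n1 = nullspace_projector(j1)
    # Task-2 Jacobian restricted to the nullspace of task 1 (row vector times N1).
    j2_n1 = [sum(j2[r] * n1[r][c] for r in range(len(j2))) for c in range(len(j2))]
    # Remaining task-2 velocity after the task-1 motion has been applied.
    residual = xd2 - dot(j2, qd1)
    qd2 = [residual * p for p in row_pinv(j2_n1)]
    return [a + b for a, b in zip(qd1, qd2)]
```

When the two tasks conflict (for example identical Jacobian rows with different targets), the projected Jacobian vanishes and the lower-priority task is simply dropped, which is the behavior "respecting constraints of higher priority tasks" implies.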

Direct physical human-robot interaction has become a central topic in robotics research. To exploit the potential of humans and robots working together as a team in industrial settings, the most important issues are safety for the human and an easy way to describe tasks for the robot. In the next video, we present a hierarchically structured control approach for industrial robots in joint-action scenarios. Multiple atomic tasks, including dynamic collision avoidance, operational position, and posture, can be combined in an arbitrary order while respecting the constraints of higher-priority tasks. The controller is based on the theory of orthogonal projection using nullspaces and on constrained least-squares optimization.

Internal 3D representation
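The paragraph below describes a 3D representation that sensor modules update by broadcasting the status of uniquely identified objects, and that the controller queries for distances. A minimal sketch of that pattern follows, assuming every object is approximated by a bounding sphere and the robot by a few sample spheres; the class and method names are hypothetical and the real representation is likely richer.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]


@dataclass
class SphereObject:
    """Hypothetical object entry: a bounding sphere keyed by a unique ID."""
    object_id: str
    center: Point
    radius: float
    static: bool = False


@dataclass
class WorldModel:
    objects: Dict[str, SphereObject] = field(default_factory=dict)

    def on_sensor_update(self, obj: SphereObject) -> None:
        """Apply a broadcast from any sensor module: insert or overwrite by object ID."""
        self.objects[obj.object_id] = obj

    def on_object_lost(self, object_id: str) -> None:
        self.objects.pop(object_id, None)

    def min_clearance(self, robot_points: Iterable[Point], robot_radius: float) -> Tuple[float, str]:
        """Smallest surface-to-surface distance between robot spheres and any object.

        Returns (distance, object_id); a negative distance means the spheres overlap,
        and (inf, "") means the world model is empty.
        """
        best = (math.inf, "")
        points = list(robot_points)
        for obj in self.objects.values():
            for p in points:
                d = math.dist(p, obj.center) - obj.radius - robot_radius
                if d < best[0]:
                    best = (d, obj.object_id)
        return best
```

Keying objects by ID means that a newer broadcast about the same object replaces the old state instead of creating a duplicate.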

This video shows a visualization of the internal 3D representation that the robot controller uses to measure distances for the collision avoidance task. The 3D representation includes static and dynamic objects and is updated according to sensor data. Every sensor module can broadcast its information about the current status of uniquely identified objects in the workspace.

Learning a new building plan and Execution of previously learned building plans

In these videos, we show how our industrial robot is instructed via speech input and how the JAHIR robot follows and executes a collaborative plan that was previously taught using multiple input modalities.

Kinect enabled robot workspace surveillance

A human co-worker is tracked using a Microsoft Kinect in a human-robot collaborative scenario.

Interactive human-robot collaboration with Microsoft Kinect
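The virtual buttons described in the paragraph below can be approximated as projected table regions that fire when the tracked hand stays inside them. The sketch assumes a dwell-time trigger, a rectangular button footprint in table coordinates, and hand positions already projected onto the table plane; all of these, including the 0.8 s default, are hypothetical and not taken from the JAHIR system.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

Point2D = Tuple[float, float]


@dataclass
class VirtualButton:
    """Hypothetical projected button: an axis-aligned rectangle on the table plane (metres)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    dwell_s: float = 0.8  # assumed hold time before the button fires
    _entered_at: Optional[float] = field(default=None, repr=False)
    _fired: bool = field(default=False, repr=False)

    def contains(self, hand_xy: Point2D) -> bool:
        x, y = hand_xy
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

    def update(self, hand_xy: Optional[Point2D], t: float) -> bool:
        """Feed one tracking sample (None = no hand seen); returns True once per press."""
        if hand_xy is None or not self.contains(hand_xy):
            # Leaving the button re-arms it for the next press.
            self._entered_at = None
            self._fired = False
            return False
        if self._entered_at is None:
            self._entered_at = t
        if not self._fired and t - self._entered_at >= self.dwell_s:
            self._fired = True
            return True
        return False
```

The dwell time helps prevent accidental activation when the worker's hand merely passes over a projected button.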

In this video, a human interacts with the robotic assistive system JAHIR to jointly perform assembly tasks. The human is tracked using a Microsoft Kinect. Virtual buttons in the menu-guided interaction allow a dynamic and adaptive way of controlling and interacting with the robotic system. The overlay video in the upper right shows a close-up of the working desk with the on-table projection of the buttons, the virtual environment representation, and the menu.

Fusing multiple Kinects to survey shared Human-Robot-Workspaces
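The processing chain in the paragraph below (several range sensors, decentralized pre-processing, then segmentation and clustering of unknown objects) can be sketched as follows. This is a stand-in, not the authors' pipeline: network broadcasting is replaced by in-memory lists, each sensor's extrinsic calibration is simplified to a hypothetical yaw rotation plus translation, and segmentation is done by removing voxels of a known scene and grouping the rest with 26-connected components on a voxel grid.

```python
import math
from collections import deque
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def to_world(points: Iterable[Point], yaw_rad: float, offset: Point) -> List[Point]:
    """Apply a simplified sensor extrinsic: rotation about z, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    ox, oy, oz = offset
    return [(c * x - s * y + ox, s * x + c * y + oy, z + oz) for x, y, z in points]


def voxelize(points: Iterable[Point], size: float) -> Set[Voxel]:
    """Map world points to the set of occupied voxel indices (edge length `size`)."""
    return {(math.floor(x / size), math.floor(y / size), math.floor(z / size)) for x, y, z in points}


def cluster_unknown(occupied: Set[Voxel], known: Set[Voxel]) -> List[Set[Voxel]]:
    """Drop voxels explained by the known scene, then group the rest by 26-connectivity."""
    remaining = occupied - known  # new set; the inputs are not modified
    clusters: List[Set[Voxel]] = []
    while remaining:
        seed = remaining.pop()
        cluster = {seed}
        queue = deque([seed])
        while queue:
            vx, vy, vz = queue.popleft()
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        n = (vx + dx, vy + dy, vz + dz)
                        if n in remaining:
                            remaining.remove(n)
                            cluster.add(n)
                            queue.append(n)
        clusters.append(cluster)
    return clusters
```

Fusing in a shared voxel grid lets overlapping views of the same object collapse into one cluster, regardless of which sensor observed it.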

In today's industrial applications, robots are often strictly separated from humans in space or time. Without surveillance of the joint workspace, a robot is unaware of unforeseen changes in its environment and cannot react properly. Since robots are heavy and bulky machines, collisions may have severe consequences for the human worker. Thus, the work area must be monitored to recognize unknown obstacles in it. With knowledge of its surroundings, the robot can be controlled to avoid collisions with any detected object, which is a prerequisite for direct collaboration between humans and industrial robots. To this end, the environment is perceived using multiple distributed range sensors (Microsoft Kinect). The sensor data sets are pre-processed in a decentralized manner and broadcast over the network. These data sets are then processed by additional components that segment and cluster unknown objects and publish the resulting information, allowing the system to react to unexpected events in the environment.

People and Partners

Robotics and Embedded Systems

- Dipl.-Ing. Claus Lenz

Human-Machine Communication, Department of Electrical Engineering and Information Technologies

- Dipl.-Inf. Jürgen Blume

- Dipl.-Ing. Alexander Bannat

- Dr.-Ing. Frank Wallhoff

Machine Tools and Industrial Management, Department of Mechanical Engineering

- Dipl.-Ing. Wolfgang Rösel

Acknowledgement

This ongoing work is supported by the DFG excellence initiative research cluster Cognition for Technical Systems (CoTeSys); see www.cotesys.org for further details.

Publications

| [1] | Claus Lenz and Alois Knoll. Mechanisms and capabilities for human robot collaboration. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, pages 666-671, Edinburgh, Scotland, UK, 2014. |

| [2] | Markus Huber, Aleksandra Kupferberg, Claus Lenz, Alois Knoll, Thomas Brandt, and Stefan Glasauer. Spatiotemporal movement planning and rapid adaptation for manual interaction. PLoS ONE, 8(5):e64982, 2013. |

| [3] | Markus Huber, Claus Lenz, Cornelia Wendt, Berthold Färber, Alois Knoll, and Stefan Glasauer. Predictive mechanisms increase efficiency in robot-supported assemblies: An experimental evaluation. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication, Gyeongju, Korea, 2013. |

| [4] | Jürgen Blume, Alexander Bannat, Gerhard Rigoll, Martijn Niels Rooker, Alfred Angerer, and Claus Lenz. Programming concept for an industrial HRI packaging cell. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication, Gyeongju, Korea, 2013. |

| [5] | Aleksandra Kupferberg, Markus Huber, Bartosz Helfer, Claus Lenz, Alois Knoll, and Stefan Glasauer. Moving just like you: Motor interference depends on similar motility of agent and observer. PLoS ONE, 7(6):e39637, 2012. |

| [6] | Claus Lenz, Markus Grimm, Thorsten Röder, and Alois Knoll. Fusing multiple Kinects to survey shared human-robot-workspaces. Technical Report TUM-I1214, Technische Universität München, Munich, Germany, 2012. |

| [7] | Claus Lenz, Alice Sotzek, Thorsten Röder, Helmuth Radrich, Alois Knoll, Markus Huber, and Stefan Glasauer. Human workflow analysis using 3D occupancy grid hand tracking in a human-robot collaboration scenario. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, USA, 2011. |

| [8] | Claus Lenz. Context-aware human-robot collaboration as a basis for future cognitive factories. Dissertation, Technische Universität München, München, 2011. |

| [9] | Alexander Bannat, Thibault Bautze, Michael Beetz, Jürgen Blume, Klaus Diepold, Christoph Ertelt, Florian Geiger, Thomas Gmeiner, Tobias Gyger, Alois Knoll, Christian Lau, Claus Lenz, Martin Ostgathe, Gunther Reinhart, Wolfgang Rösel, Thomas Rühr, Anna Schuboe, Kristina Shea, Ingo Stork genannt Wersborg, Sonja Stork, William Tekouo, Frank Wallhoff, Mathey Wiesbeck, and Michael F. Zäh. Artificial cognition in production systems. IEEE Transactions on Automation Science and Engineering, PP(99):1-27, 2010. |

| [10] | Manuel Giuliani, Claus Lenz, Thomas Müller, Markus Rickert, and Alois Knoll. Design principles for safety in human-robot interaction. International Journal of Social Robotics, 2(3):253-274, 2010. |

| [11] | Markus Huber, Alois Knoll, Thomas Brandt, and Stefan Glasauer. When to assist? Modelling human behaviour for hybrid assembly systems. In ISR - ROBOTIK 2010, Munich, Germany, 2010. |

| [12] | Gunther Reinhart and Wolfgang Rösel. Interaktiver Assistenzroboter in der Montage - Sicherheitsaspekte in der Mensch-Roboter-Kooperation: Interactive Robot-assistant in Production Environments - Safety Aspects in Human-Robot Co-operation. Zeitschrift für wirtschaftlichen Fabrikbetrieb (ZwF), 2(1):80-84, 2010. |

| [13] | Frank Wallhoff, Jürgen Blume, Alexander Bannat, Wolfgang Rösel, Claus Lenz, and Alois Knoll. A skill-based approach towards hybrid assembly. Advanced Engineering Informatics, 24(3):329-339, 2010. The Cognitive Factory. |

| [14] | Alexander Bannat, Jürgen Gast, Tobias Rehrl, Gerhard Rigoll, Frank Wallhoff, Wolfgang Rösel, and Gunther Reinhart. A Multimodal Human-Robot-Interaction Scenario: Working together with an industrial robot. In Proceedings of the 13th International Conference on Human-Computer Interaction, San Diego, CA, USA, 2009. |

| [15] | Alexander Bannat, Jürgen Gast, Tobias Rehrl, Wolfgang Rösel, Gerhard Rigoll, and Frank Wallhoff. A Multimodal Human-Robot-Interaction Scenario: Working Together with an Industrial Robot. In J.A. Jacko, editor, Proceedings of the International Conference on Human-Computer Interaction, volume LNCS 5611, pages 303-311, San Diego, CA, USA, 2009. Springer. |

| [16] | Jürgen Gast, Alexander Bannat, Tobias Rehrl, Frank Wallhoff, Gerhard Rigoll, Cornelia Wendt, Sabrina Schmidt, Michael Popp, and Berthold Färber. Real-time Framework for Multimodal Human-Robot Interaction. In Proceedings of the IEEE 2nd Conference on Human-System-Interaction, Catania, Italy, 2009. |

| [17] | Jürgen Gast, Alexander Bannat, Tobias Rehrl, Gerhard Rigoll, Frank Wallhoff, Christoph Mayer, and Bernd Radig. Did I Get It Right: Head Gestures Analysis for Human-Machine Interactions. In J.A. Jacko, editor, Proceedings of the International Conference on Human-Computer Interaction, volume LNCS 5611, pages 170-177, San Diego, CA, USA, 2009. Springer. |

| [18] | Sabine Koll and Wolfgang Rösel. Kollege Roboter: Menschliche Maschinen heben leichter. Industrieanzeiger, 131(12):48-49, 2009. |

| [19] | Claus Lenz, Giorgio Panin, Thorsten Röder, Martin Wojtczyk, and Alois Knoll. Hardware-assisted multiple object tracking for human-robot-interaction. In Francois Michaud, Matthias Scheutz, Pamela Hinds, and Brian Scassellati, editors, HRI '09: Proceedings of the 4th ACM/IEEE international conference on Human robot interaction, pages 283-284, La Jolla, CA, USA, 2009. ACM. |

| [20] | Claus Lenz, Markus Rickert, Giorgio Panin, and Alois Knoll. Constraint task-based control in industrial settings. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 3058-3063, St. Louis, MO, USA, 2009. |

| [21] | Tobias Rehrl, Alexander Bannat, Jürgen Gast, Gerhard Rigoll, and Frank Wallhoff. cfHMI: A Novel Contact-Free Human-Machine Interface. In J.A. Jacko, editor, Proceedings of the International Conference on Human-Computer Interaction, volume LNCS 5611, pages 246-254, San Diego, CA, USA, 2009. Springer. |

| [22] | Wolfgang Rösel and Gunther Reinhart. Safety aspects in an industrial environment with human-robot interaction. In M. F. Zäh and H. A. ElMaraghy, editors, 3rd International Conference on Changeable, Agile, Reconfigurable and Virtual Production (CARV 2009). Utz, 2009. |

| [23] | Frank Wallhoff, Alexander Bannat, Jürgen Gast, Tobias Rehrl, Moritz Dausinger, and Gerhard Rigoll. Statistics-Based Cognitive Human-Robot Interfaces for Board Games - Let's play! In Jacko, editor, Proceedings of the International Conference on Human-Computer Interaction, volume LNCS 5611, pages 708-715, San Diego, CA, USA, 2009. Springer. |

| [24] | Michael Zäh and Wolfgang Rösel. Safety Aspects in a Human-Robot Interaction Scenario: A Human Worker is Co-operating with an Industrial Robot. In Advances in Robotics: FIRA RoboWorld Congress, Conference on Social Robotics, Incheon, Korea, 2009. |

| [25] | Alexander Bannat, Jürgen Gast, Gerhard Rigoll, and Frank Wallhoff. Event analysis and interpretation of human activity for augmented reality-based assistant systems. In IEEE Proceeding ICCP 2008, Cluj-Napoca, Romania, 2008. |

| [26] | Markus Huber, Claus Lenz, Markus Rickert, Alois Knoll, Thomas Brandt, and Stefan Glasauer. Human preferences in industrial human-robot interactions. In Proceedings of the International Workshop on Cognition for Technical Systems, Munich, Germany, 2008. |

| [27] | Claus Lenz, Suraj Nair, Markus Rickert, Alois Knoll, Wolfgang Rösel, Jürgen Gast, and Frank Wallhoff. Joint-action for humans and industrial robots for assembly tasks. In Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication, pages 130-135, Munich, Germany, 2008. |

| [28] | Thomas Müller, Claus Lenz, Simon Barner, and Alois Knoll. Accelerating integral histograms using an adaptive approach. In Proceedings of the 3rd International Conference on Image and Signal Processing, Lecture Notes in Computer Science (LNCS), pages 209-217, Cherbourg-Octeville, France, 2008. Springer. |

| [29] | Frank Wallhoff, Jürgen Gast, Alexander Bannat, Stefan Schwärzler, Gerhard Rigoll, Cornelia Wendt, Sabrina Schmidt, Michael Popp, and Berthold Färber. Real-time framework for on- and off-line multimodal human-human and human-robot interaction. In Proceedings of the International Workshop on Cognition for Technical Systems, Munich, Germany, 2008. |

| [30] | Gunther Reinhart, Wolfgang Vogel, Wolfgang Rösel, Frank Wallhoff, and Claus Lenz. JAHIR - Joint action for humans and industrial robots. In Fachforum: Intelligente Sensorik - Robotik und Automation. Bayern Innovativ - Gesellschaft für Innovation und Wissenstransfer mbH, 2007. |

| [31] | Gunther Reinhart, William Tekouo, Wolfgang Rösel, Matthey Wiesbeck, and Wolfgang Vogl. Robotergestützte kognitive Montagesysteme - Montagesysteme der Zukunft. wt Werkstattstechnik online, 97(9):689-693, 2007. |

| [32] | Michael Zäh, Wolfgang Vogl, Christian Lau, Matthey Wiesbeck, and Martin Ostgathe. Towards the Cognitive Factory. 2nd International Conference on Changeable, Agile, Reconfigurable and Virtual Production, 2007. |