Supporting Cognitive Processes on Mobile Platforms Using Joint Geometry-Based and Image-Based Environment Modeling

VEM (Visual Environment Modeling) is a project supported in part by the DFG excellence initiative research cluster Cognition for Technical Systems (CoTeSys). It aims at joint geometry-based and image-based environment modeling to provide a better understanding of a cognitive system's surroundings. All algorithms are based exclusively on visual data, so the robots only need to be equipped with cameras to build their own environment models.

First results are already available for the following tasks:

- real-time image-based localization

- visual homing

- image-based environment modeling

- visual surprise trigger based on Bayesian inference

Further work addresses the following topics:

- robot-robot joint model generation

- robot-robot registration (time and space)

- segmentation of dynamic and static environment

- classification of the environment as stable or chaotic

- generation of a model representing the variations in motion

- dynamic rendering of known objects

- robust rendering with changing lighting conditions

- exploration methods (next-best-view planning)

In the following, we summarize our work on visual localization, visual homing, image-based modeling and surprise detection. Experimental results demonstrate the applicability of our algorithms. For more technical and mathematical details, please refer to [1], [2].

Visual Localization


The first step in the generation of image-based models is the accurate localization of the captured images. Our real-time capable algorithm estimates the position and orientation of the camera during the acquisition of the image sequence. Since our method does not require any external references, such as artificial markers in the scene or the dimensions of a known object, it is very flexible and well suited to a cognitive system navigating in real-world environments. Novel key features of the framework include accelerated Kanade-Lucas-Tomasi (KLT) tracking, fast sub-pixel accurate stereo matching, feature recovery and an intelligent feature set hand-off. The figure on the right shows a screenshot of the visual localization routine; the marks are the output of the KLT tracker and the localization result.
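The page does not specify how sub-pixel accuracy is reached in the stereo matching. One common approach, shown here as a minimal sketch under that assumption and not necessarily the VEM implementation, computes a sum-of-absolute-differences (SAD) cost over integer disparities along a rectified scanline and fits a parabola through the cost minimum and its two neighbors. The function names `sad_costs` and `subpixel_disparity` and the default window size are hypothetical.

```python
import numpy as np


def sad_costs(left_row, right_row, x, half_win=3, max_disp=16):
    """SAD matching cost of left pixel x for integer disparities 0..max_disp.

    Assumes rectified scanlines (matches lie on the same row) and that the
    window around x fits inside the left row. Disparities whose window would
    leave the right row keep an infinite cost.
    """
    left_row = np.asarray(left_row, dtype=float)
    right_row = np.asarray(right_row, dtype=float)
    patch = left_row[x - half_win : x + half_win + 1]
    costs = np.full(max_disp + 1, np.inf)
    for d in range(max_disp + 1):
        xr = x - d  # candidate column in the right image
        if xr - half_win < 0:
            break
        cand = right_row[xr - half_win : xr + half_win + 1]
        costs[d] = np.abs(patch - cand).sum()
    return costs


def subpixel_disparity(costs):
    """Refine the integer cost minimum with a parabola through three neighboring costs."""
    d = int(np.argmin(costs))
    if d == 0 or d == len(costs) - 1 or not np.isfinite(costs[d + 1]):
        return float(d)  # no valid neighbor on one side: keep integer disparity
    c_m, c_0, c_p = costs[d - 1], costs[d], costs[d + 1]
    denom = c_m - 2.0 * c_0 + c_p  # curvature of the fitted parabola
    if denom <= 0.0:
        return float(d)  # flat or degenerate cost curve
    # Vertex of the parabola; lies within +/-0.5 of d because c_0 is the minimum.
    return d + 0.5 * (c_m - c_p) / denom
```

For a right scanline shifted by 4.3 pixels relative to a smooth left scanline, the refined disparity lands between the integer candidates 4 and 5. The parabola fit costs only three extra cost lookups per pixel, which is one reason it is popular in real-time matchers.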

Visual Homing

Homing is the problem of a robot relocalizing itself in a known environment. It arises whenever the robot is switched off and on again or the KLT tracking routine fails, e.g. due to an excessive turning rate. If the robot cannot register its current position with respect to the acquired environment model, its entire history becomes useless. A solution to this problem is therefore required to allow a robot to use an acquired model across more than one run. We developed two approaches, both again based only on images. In both variants, a 3D landmark cloud is constructed; the two methods differ in how these landmarks are matched, which gives them different robustness and accuracy. Which algorithm to use depends on the application and the scene.
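The matching strategies of the two homing variants are not described here. Once correspondences between 3D points triangulated from the current stereo pair and the stored landmark cloud are available, however, the robot's pose can be recovered by a least-squares rigid alignment. The sketch below uses the Kabsch (SVD) algorithm as an illustrative stand-in, not as the VEM method; it assumes outlier-free matches, whereas a real system would typically wrap it in a robust estimator such as RANSAC. `rigid_transform_3d` is a hypothetical name.

```python
import numpy as np


def rigid_transform_3d(src, dst):
    """Least-squares rotation R and translation t with dst ~= R @ src + t (Kabsch).

    src, dst: (N, 3) arrays of matched 3D points, N >= 3, not all collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)  # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = c_dst - R @ c_src
    return R, t
```

If `src` holds the landmarks expressed in the current camera frame and `dst` the same landmarks in the model frame, the returned `R`, `t` map camera coordinates into the model frame, i.e. they give the camera pose within the stored model.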

Image-Based View Prediction


One type of image-based scene representation that has recently become very popular uses view-dependent geometry and texture. Instead of computing a global geometry model that is valid for any viewpoint and viewing direction, the geometry of the scene is estimated locally and holds only for a small region in the viewpoint space. This approach has been shown to be particularly suitable when the scene contains specular and translucent objects. Accordingly, a per-pixel depth map is calculated for each reference image. While this is done off-line, view selection and view synthesis are performed on-line: each time a new frame is rendered, the view selection chooses a small subset of all reference images to contribute to the view interpolation. In real-world environments most surfaces are non-Lambertian, which means that the reflected intensity depends on the position of the viewer. Hence, in our approach, the reference cameras are ranked by their orthogonal distance to predefined rays within the viewing frustum of the virtual camera. Novel virtual views are synthesized in a two-pass procedure: the color data of the selected reference images is first warped into the virtual view, after which the final color of each pixel is determined using probabilistic models.
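The ranking step of the view selection can be sketched as follows, assuming that each reference camera is represented by its optical center and that each predefined ray starts at the virtual camera's center. How the rays are placed within the frustum and how many cameras are kept per ray are not given in the text; `k_per_ray` and both function names are hypothetical, and clamping the projection at the ray origin (so that cameras behind the virtual camera are measured to its center) is an assumption of this sketch.

```python
import numpy as np


def point_ray_distance(points, origin, direction):
    """Orthogonal distance of each point (N, 3) to the ray origin + s * direction, s >= 0."""
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    v = np.asarray(points, dtype=float) - origin
    s = np.clip(v @ d, 0.0, None)  # projection onto the ray, clamped at the origin (assumption)
    foot = origin + np.outer(s, d)  # closest point on the ray for every camera
    return np.linalg.norm(points - foot, axis=1)


def select_reference_cameras(cam_centers, virt_center, ray_dirs, k_per_ray=1):
    """For each predefined ray, keep the k reference cameras closest to it; return their indices."""
    cam_centers = np.asarray(cam_centers, dtype=float)
    selected = set()
    for r in ray_dirs:
        dist = point_ray_distance(cam_centers, virt_center, r)
        selected.update(np.argsort(dist)[:k_per_ray].tolist())
    return sorted(selected)
```

Keeping only the cameras nearest to a few rays bounds the number of images that must be warped per frame, which matches the on-line budget described above.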

The image on the right shows the virtually rendered scene together with the camera positions of the acquired images.
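The first pass of the view synthesis, warping reference pixels into the virtual view with the per-pixel depth maps, follows standard pinhole geometry. A minimal sketch, assuming known intrinsics `K` and world-to-camera poses `(R, t)` for every camera and depth measured along the optical axis; `warp_pixel` is a hypothetical helper, and the probabilistic second pass is not modeled here.

```python
import numpy as np


def warp_pixel(u, v, depth, K_ref, R_ref, t_ref, K_virt, R_virt, t_virt):
    """Warp reference pixel (u, v) with the given depth into the virtual view.

    Poses map world to camera coordinates: X_cam = R @ X_world + t.
    Returns (u', v', depth') in the virtual view.
    """
    x_ref = depth * np.linalg.inv(K_ref) @ np.array([u, v, 1.0])  # back-project to 3D
    X_world = R_ref.T @ (x_ref - t_ref)  # reference camera -> world
    x_virt = R_virt @ X_world + t_virt  # world -> virtual camera
    uvw = K_virt @ x_virt  # project with the virtual intrinsics
    return uvw[0] / uvw[2], uvw[1] / uvw[2], x_virt[2]
```

When several reference pixels land on the same virtual pixel, a depth test on the returned depth (keeping the nearest sample) is one common way to resolve occlusions.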

Surprise Detection

We proposed a scheme for Bayesian visual surprise detection based on the probabilistic concept for view synthesis. Similar to the processing of color information in the human visual system, we compute from each RGB reference image a luminance signal and two color opponency signals (red-green and blue-yellow). Thus, surprise detection does not have to be performed jointly in RGB space but can be done independently in three decoupled pathways. The luminance samples warped into the virtual view from the reference images are assumed to be drawn from a Gaussian distribution. We put a prior distribution over its precision, i.e., the reciprocal of its variance.
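The page does not state the exact color transform. A minimal sketch of one simple opponent-color decomposition, assuming a float RGB image: luminance as the mean of R, G and B, red-green as R - G, and blue-yellow as B - (R + G)/2. These weights are an illustrative assumption, not necessarily those used in VEM.

```python
import numpy as np


def opponent_channels(rgb):
    """Split an (H, W, 3) float RGB image into luminance, red-green and blue-yellow signals."""
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    lum = (r + g + b) / 3.0  # achromatic channel
    rg = r - g  # red-green opponency
    by = b - (r + g) / 2.0  # blue-yellow opponency (yellow taken as the mean of R and G)
    return lum, rg, by
```

Because the three outputs are computed independently, each can be fed to its own surprise detector, matching the three decoupled pathways described above.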

The Kullback-Leibler divergence (KLD) between the posterior distribution, obtained after incorporating the observed image, and the prior distribution serves as a quantitative measure of surprise. The KLD is evaluated for each pixel in the virtual image, which yields a pixel-wise surprise trigger.
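The per-pixel computation can be sketched with the standard conjugate model for a Gaussian with unknown precision: a Gamma prior (shape a, rate b) on the precision is updated with the warped reference samples, then with the observed value, and the surprise is the KLD between the resulting Gamma posterior and prior. Treating the mean as known (the sample mean of the warped reference samples), the hyperparameters `a0`, `b0`, and all function names are assumptions of this sketch, not the exact model of [1]. The digamma helper is hand-written to stay within the standard library; `scipy.special.digamma` could replace it.

```python
import math


def digamma(x):
    """Digamma function for x > 0 (upward recurrence, then asymptotic series)."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x  # psi(x) = psi(x + 1) - 1/x
        x += 1.0
    inv, inv2 = 1.0 / x, 1.0 / (x * x)
    result += math.log(x) - 0.5 * inv - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))
    return result


def kl_gamma(a_p, b_p, a_q, b_q):
    """KL(Gamma(a_p, rate b_p) || Gamma(a_q, rate b_q))."""
    return ((a_p - a_q) * digamma(a_p) - math.lgamma(a_p) + math.lgamma(a_q)
            + a_q * (math.log(b_p) - math.log(b_q)) + a_p * (b_q - b_p) / b_p)


def pixel_surprise(ref_samples, observed, a0=1.0, b0=1e-3):
    """Bayesian surprise of one observed luminance value given warped reference samples."""
    n = len(ref_samples)
    mu = sum(ref_samples) / n  # mean treated as known (assumption of this sketch)
    # Prior over the precision after seeing the reference samples.
    a_prior = a0 + 0.5 * n
    b_prior = b0 + 0.5 * sum((x - mu) ** 2 for x in ref_samples)
    # Conjugate update with the single observed value.
    a_post = a_prior + 0.5
    b_post = b_prior + 0.5 * (observed - mu) ** 2
    return kl_gamma(a_post, b_post, a_prior, b_prior)
```

An observation far from the warped reference samples inflates the rate parameter and hence the KLD, so evaluating `pixel_surprise` at every pixel yields a map whose high values mark unexpected changes.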

Results

Cognitive Household:

The episode we envision within the assistive household scenario is the acquisition of an image-based model of a typical household environment, which serves as the basis for cognitive processes. With our module, a cognitive robot in the household should be able to update its environment model at any time through surprise detection and to classify the objects around it as static or dynamic. Moreover, surprise about unexpected events should influence the robot's action plans. Robust visual localization should enable it to retrieve its current position in the environment and to evaluate its current observation with respect to the already acquired reference model.

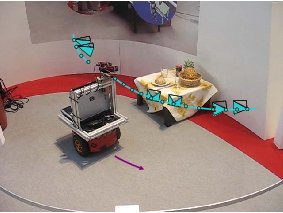

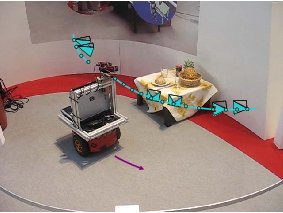


This figure shows the acquisition of an image sequence S1 with a stereo camera head (640x480 pixels) mounted on a Pioneer 3-DX robot during AUTOMATICA 2008. The robot moved along an approximately circular trajectory around a table set with household objects such as glasses and plates, with the stereo camera looking towards the objects, and captured 213 image pairs.


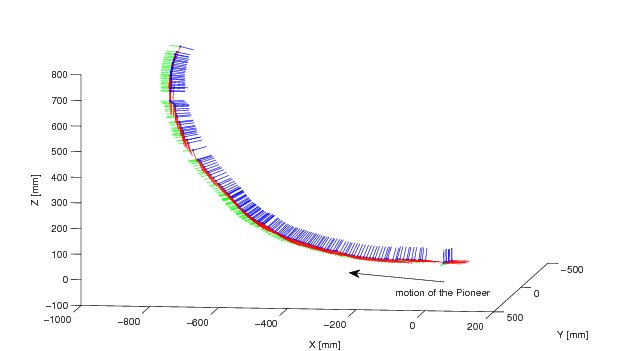

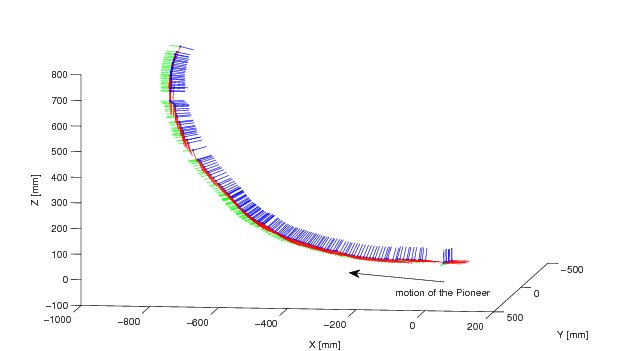

The visual localization results for image sequence S1 are illustrated in this figure. The time slots in which the camera images could not be stored on the hard disk due to the swapping mechanism can easily be recognized as white gaps in the trajectory. However, swapping does not affect the accuracy of the localization algorithm.


Close-up of the rendered scene.


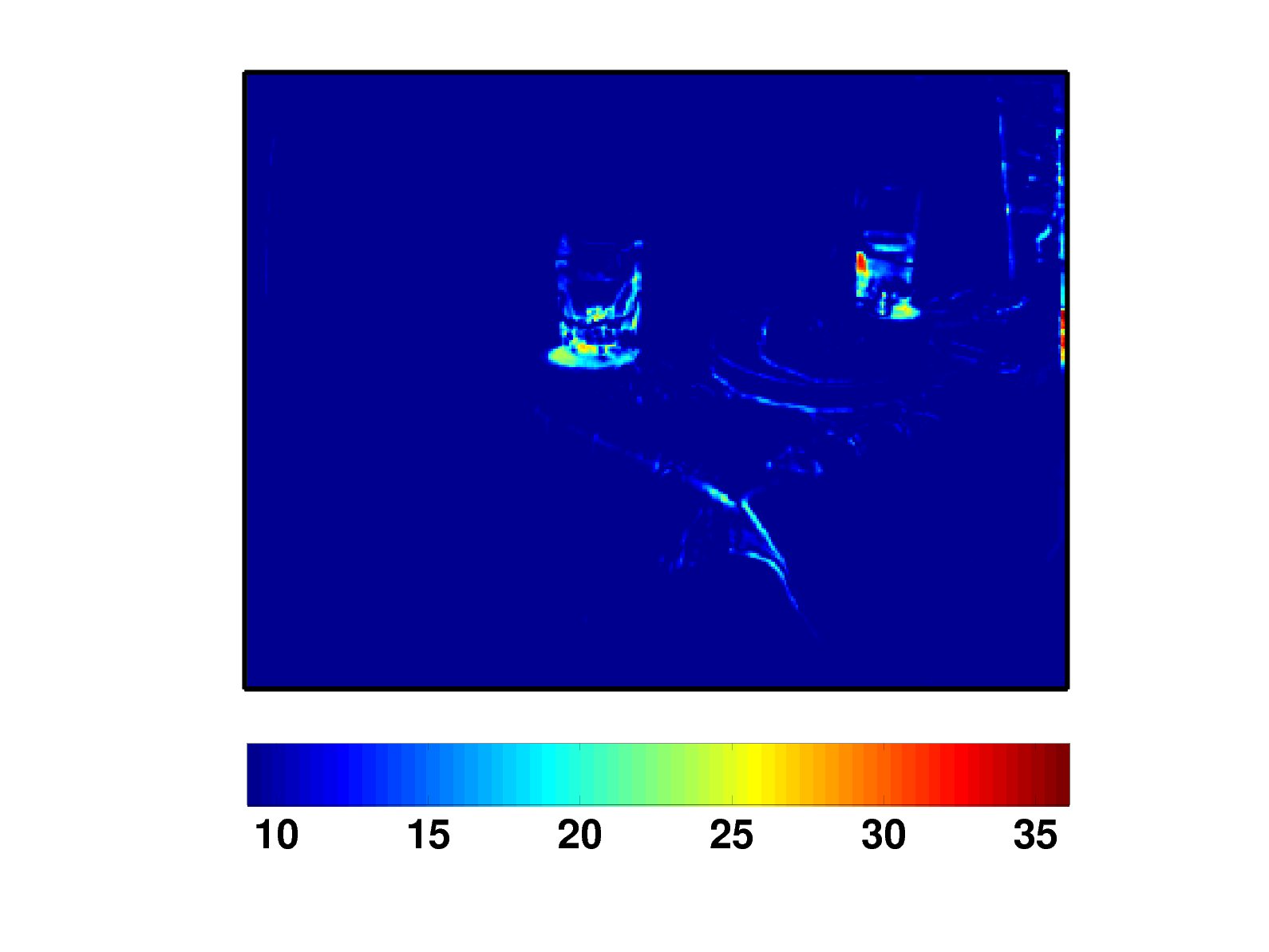

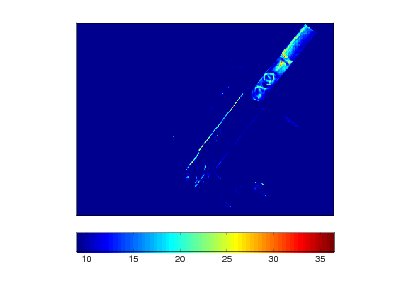

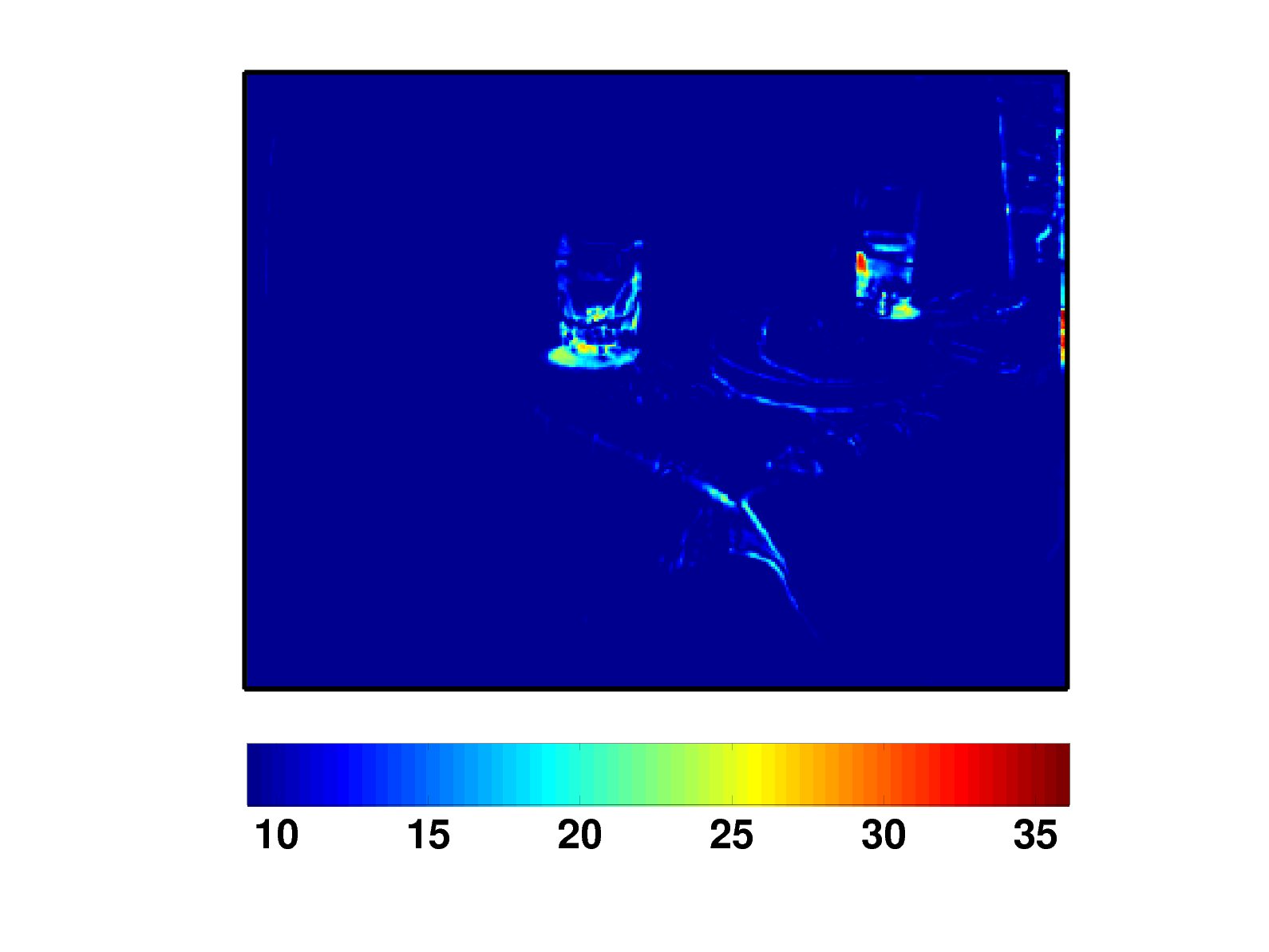

To test our surprise detection algorithm, we captured another image sequence S2 on a trajectory that was close to, but not identical with, the first one. We changed the scene by removing the two glasses. The task of the cognitive system is to detect these changes.

One observation image from S2 is depicted in the right figure, together with a photorealistic virtual image (left figure) rendered from reference images selected only from image sequence S1. Note that no real camera image in S1 was acquired at exactly the position of the observation.


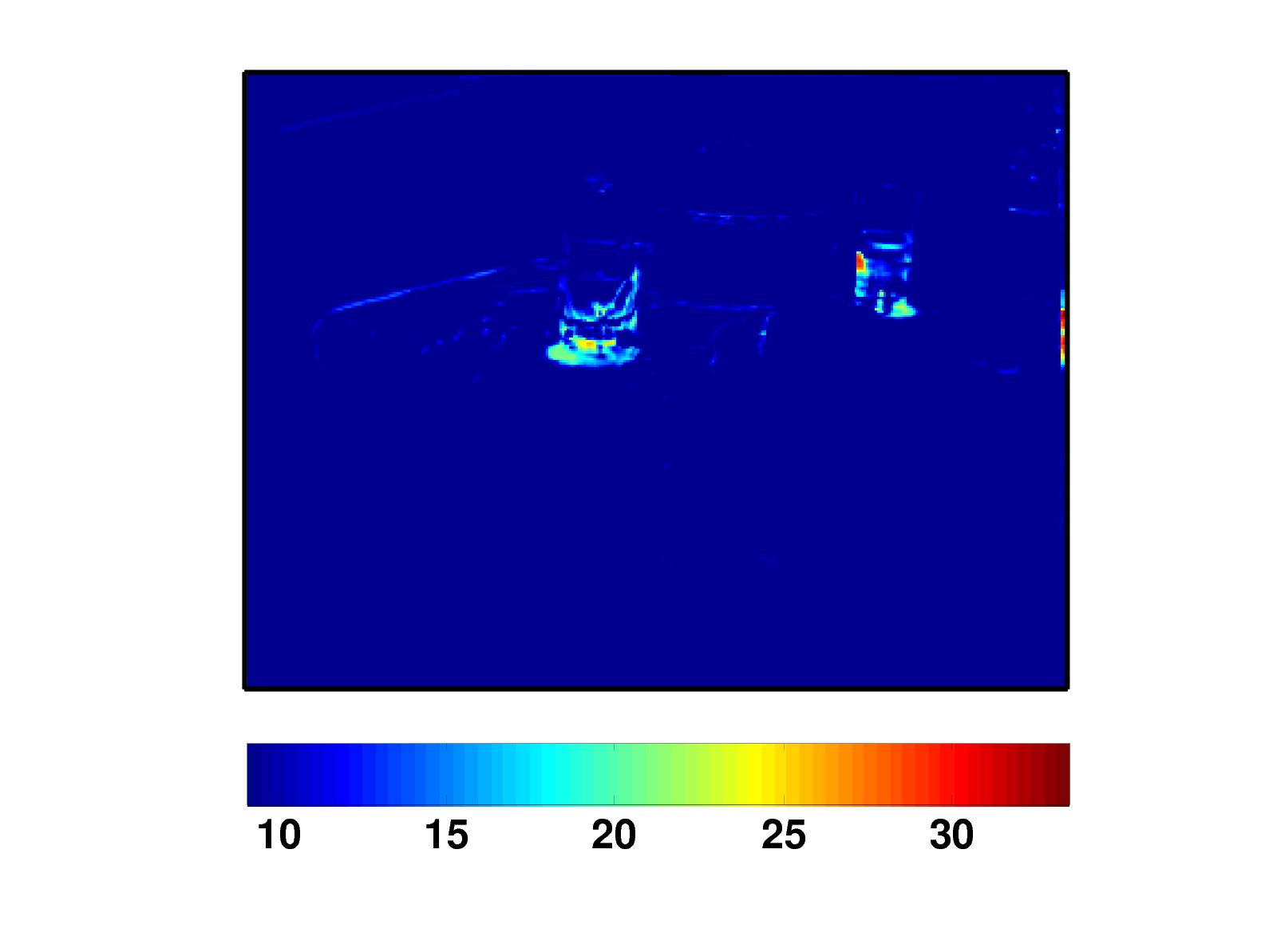

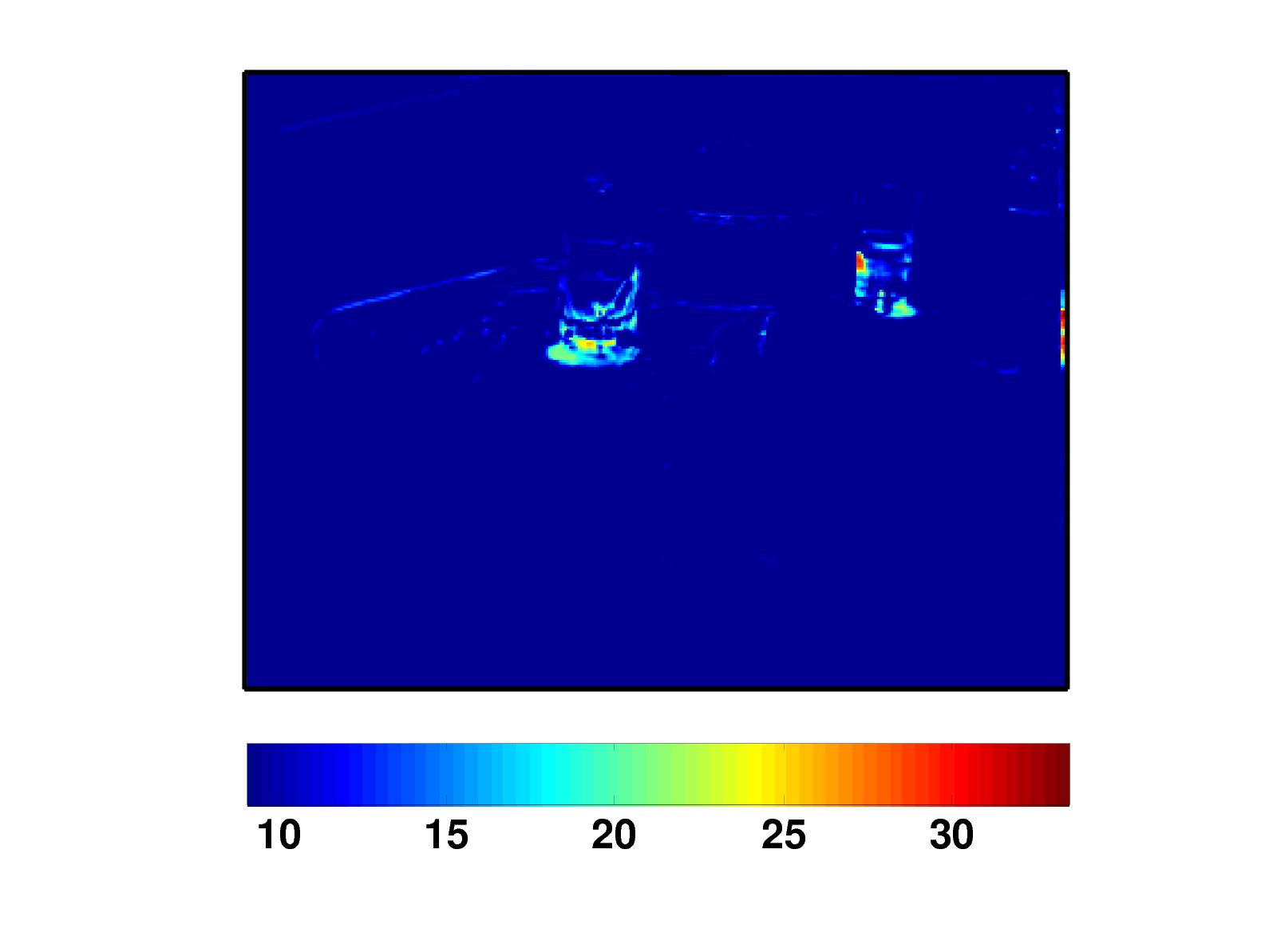

Applying our algorithm for surprise detection to the luminance signals of the two images, we obtained the surprise trigger shown in the right figure. It clearly shows a region of high KLD values around the missing glasses.


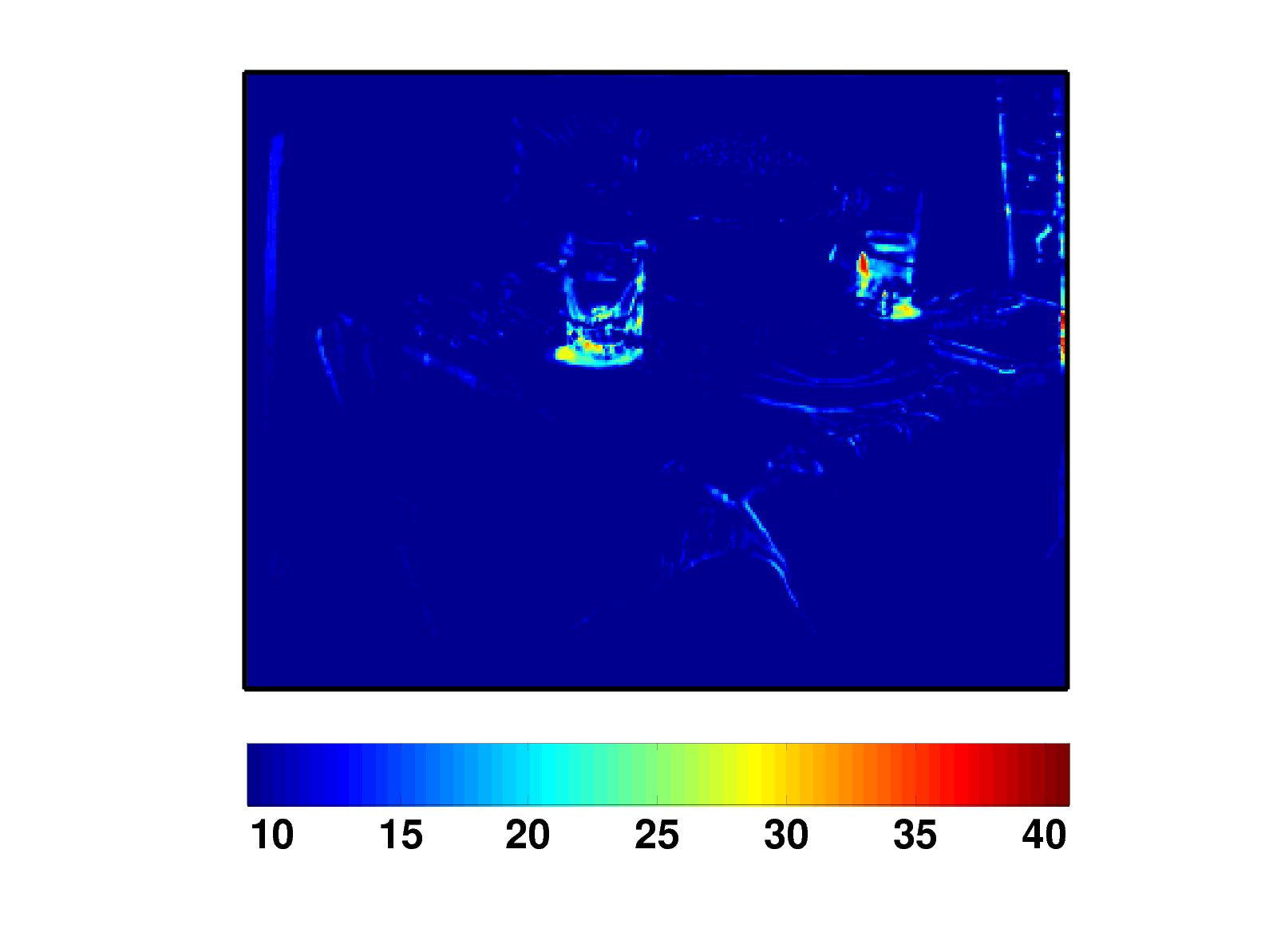

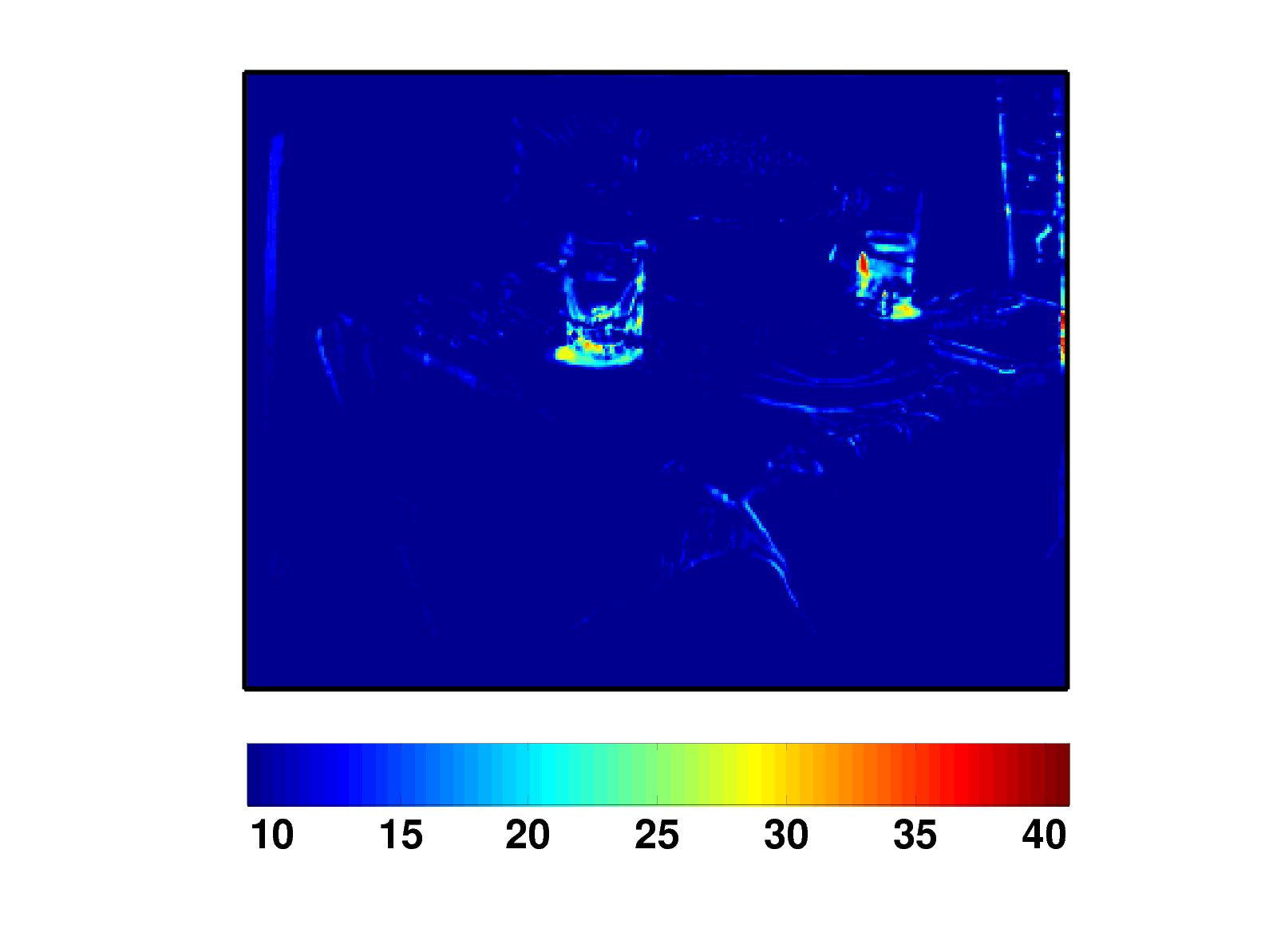

This figure shows results for surprise detection when the pose is obtained with our two strategies for the homing problem. Since the automatically estimated pose is less accurate, the surprise trigger is higher than in the previous figure in regions where no changes occurred. However, there is still a pronounced region of high surprise around the missing glasses compared to the rest of the surprise map.

JAHIR - Cognitive Factory:

An episode demonstrating joint action is planned in JAHIR. The goal is for a robot to mount a product while communicating with a human worker via speech and virtual buttons projected onto the work table. We are going to integrate the algorithms described in this work into the quality assessment step after the product is mounted. To this end, an image sequence of the mounted, error-free product is first captured and processed into a reference image-based model. Our surprise detection algorithm then detects unforeseen changes in the product, which might be due to failures in individual production steps. It is desirable that the inspecting camera is localized with respect to the product rather than the robot's coordinate system, since then the product does not need to be at exactly the same position as during the acquisition of the reference model.

The cognitive robot uses this surprise trigger to make decisions about repair strategies and the next production steps.

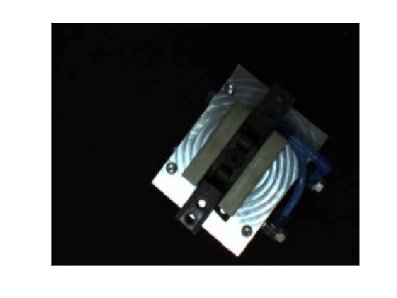

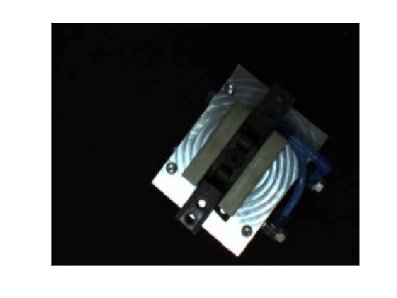


For our experiments, we acquired an image sequence of a workpiece in collaboration with the JAHIR project group, using their demonstration robot. This sequence was used to generate the reference model. An image of a slightly modified workpiece simulates a faulty product after mounting. The left figure shows an image of the final product with a missing piece, compared to the reference final product depicted in the virtual image in the right figure. Because of the black, uniformly colored plate beneath the workpiece, features are found only on the workpiece, and hence the localization refers solely to that object. The issues to be tackled in this application are considerably more difficult than in the cognitive kitchen: only a small field of view can be used for feature extraction, which leads to an ill-conditioned pose estimation. Furthermore, the workpiece is shiny, so unreliable landmarks and stereo correspondences are found.

Nevertheless, we obtained acceptable results for the estimated poses and virtual images.

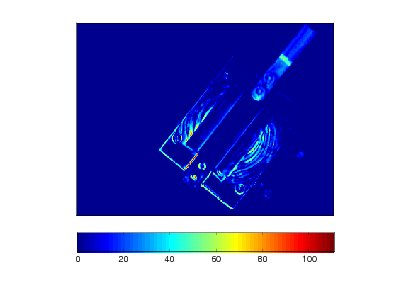

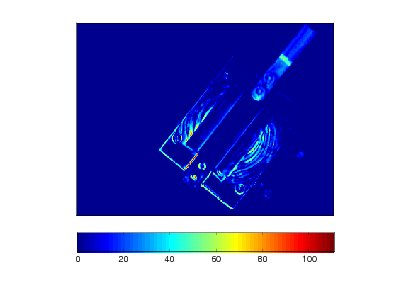

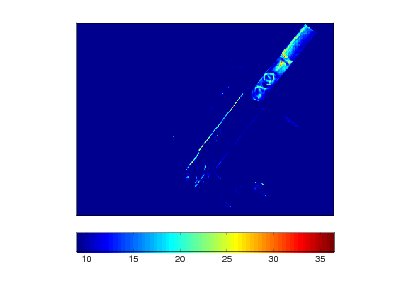


The left figure shows the pixel-wise absolute difference between the luminance images of the previous figures. Simple differencing is widely used in image change detection. However, the difference image clearly shows high values in regions where no changes occurred. This is because the reflected intensity strongly depends on the viewpoint: the average intensity of the reference images selected for the interpolation of the virtual image can differ from the intensity of the observation even though there is no real change. Our method (right figure) yields a more robust surprise trigger along the surface of the workpiece and mainly highlights the missing part of the workpiece.
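For comparison, the simple differencing baseline amounts to a pixel-wise absolute luminance difference followed by a threshold. The minimal sketch below (the threshold value and the function name are hypothetical) reproduces the failure mode described above: a uniform brightening of the observation, as caused by viewpoint-dependent reflection, is flagged everywhere although nothing in the scene changed.

```python
import numpy as np


def difference_change_mask(lum_virtual, lum_observed, threshold=0.1):
    """Baseline change detection: absolute luminance difference and its thresholded mask."""
    diff = np.abs(np.asarray(lum_observed, float) - np.asarray(lum_virtual, float))
    return diff, diff > threshold  # True marks a pixel reported as "changed"
```

Calling it with an observation that is the virtual image plus a constant 0.15 marks every pixel as changed, while an observation that differs in a single pixel marks only that pixel. The baseline cannot tell the two cases apart by cause, which is what the probabilistic surprise trigger is meant to address.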

Further results:

Our visual localization algorithm was also applied in the DLR 3D-Modeler of the German Aerospace Center (DLR). This hand-held, online, purely vision-based 3D modeling system, the first of its kind, was presented to the public at AUTOMATICA 2008. In an application such as online 3D modeling, accuracy, speed and robustness are crucial. Our localization framework has proven to satisfy all these demands and to provide appropriate results.

People

- Prof. Dr.-Ing. Darius Burschka (Robotics and Embedded Systems - institute member)

- Prof. Dr.-Ing. Gerd Hirzinger (German Aerospace Center - DLR)

- Prof. Dr.-Ing. Eckehard Steinbach (Department of Electrical Engineering - TUM)

- Elmar Mair, M.Sc. (Robotics and Embedded Systems - institute member)

- Dipl.-Ing. Werner Maier (Department of Electrical Engineering - TUM)

Links

Video of our exhibit at the AUTOMATICA 2008 (CoTeSys stand) - 20MB

VEM(319)-JAHIR video - 6MB

Publications

[1] Werner Maier, Fengqing Bao, Elmar Mair, Eckehard Steinbach, and Darius Burschka. Illumination-invariant image-based novelty detection in a cognitive mobile robot's environment. In Proceedings of the IEEE International Conference on Robotics and Automation 2010 (ICRA'10), May 2010.

[2] Elmar Mair, Klaus H. Strobl, Michael Suppa, and Darius Burschka. Efficient camera-based pose estimation for real-time applications. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems 2009 (IROS'09), October 2009.

[3] Werner Maier, Elmar Mair, Darius Burschka, and Eckehard Steinbach. Visual homing and surprise detection for cognitive mobile robots using image-based environment representations. In Proceedings of the IEEE International Conference on Robotics and Automation 2009 (ICRA'09), May 2009.

[4] Werner Maier, Elmar Mair, Darius Burschka, and Eckehard Steinbach. Surprise detection and visual homing in cognitive technical systems. In 1st International Workshop on Cognition for Technical Systems, October 2008.

[5] Elmar Mair, Werner Maier, Darius Burschka, and Eckehard Steinbach. Image-based environment perception for cognitive technical systems. In 1st International Workshop on Cognition for Technical Systems, October 2008.